Modern software usually involves running tasks that invoke services. If a service is subjected to intermittent heavy loads, performance or reliability issues can result. If the same service is used by a number of tasks running concurrently, it can be difficult to predict the volume of requests the service will receive at any given point in time.

It is possible that a service might experience peaks in demand that cause it to become overloaded and unable to respond to requests in a timely manner. Flooding a service with a large number of concurrent requests may also result in the service failing if it is unable to handle the contention that these requests could cause.

The Asynchronous Queue

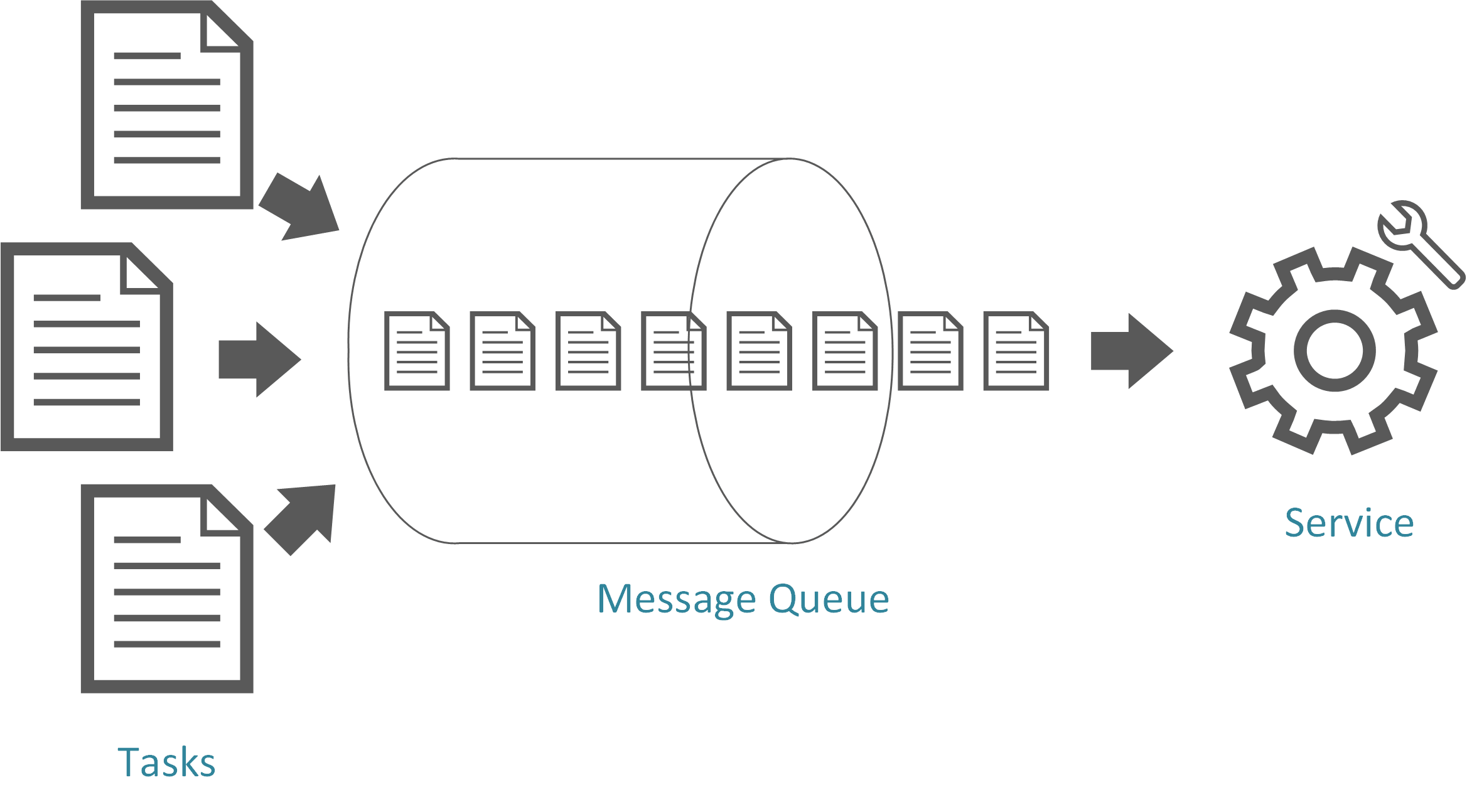

By introducing an Asynchronous Queue between a task and a service, you will be decoupling the task from the service. The service will be able to handle the messages at its own pace, irrespective of the volume of requests from concurrent tasks.

The queue acts as a buffer, storing the message until it is retrieved by the service. The service retrieves the messages from the queue and processes them.

Requests from a number of different tasks, which can be generated at a highly variable rate, can be passed to the service through the same message queue. The service then processes them at a steady rate it can sustain, no matter how bursty the incoming traffic is.
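To make the buffering idea concrete, here is a minimal in-process sketch, assuming Python's standard `queue.Queue` stands in for a real message queue and threads stand in for tasks and the service. The function name `run_load_leveling` and its parameters are made up for illustration.

```python
import queue
import threading
import time


def run_load_leveling(num_tasks=4, requests_per_task=5, service_delay=0.01):
    """Burst requests from several tasks into one queue; one service drains it."""
    message_queue = queue.Queue()  # the buffer between the tasks and the service
    processed = []                 # messages the service has handled, in order

    def task(task_id):
        # A task posts its requests as fast as it can and never waits for the service.
        for n in range(requests_per_task):
            message_queue.put((task_id, n))

    def service():
        # The service pulls one message at a time and works at its own pace.
        while True:
            message = message_queue.get()
            if message is None:        # sentinel: no more work is coming
                break
            time.sleep(service_delay)  # simulated processing time
            processed.append(message)

    worker = threading.Thread(target=service)
    worker.start()

    tasks = [threading.Thread(target=task, args=(i,)) for i in range(num_tasks)]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()  # the tasks are done long before the service catches up

    backlog = message_queue.qsize()  # approximate number of requests still buffered
    message_queue.put(None)
    worker.join()
    return backlog, processed
```

Calling `run_load_leveling()` returns the approximate backlog left in the queue once the tasks have finished, plus the full list of processed messages. The tasks finish almost immediately, while the service keeps draining the queue one message at a time.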

Benefits

- Maximizing Availability: Delays or downtime in the service do not affect the application generating the messages, which can keep posting to the queue

- Maximizing Scalability: The number of queues and service instances can be varied to meet demand. In a later blog post, I discuss the Competing Consumer Pattern, which helps in scaling your applications by running multiple instances of a service, each of which acts as a message consumer from the load-leveling queue.

- Controlling Costs: You don't have to design your service to meet peak load, only average load
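The scalability point can be sketched the same way: several instances of a service pull from one shared queue, and each message goes to exactly one of them. This is a rough illustration using Python's standard library only. `run_competing_consumers` is a hypothetical helper, and a real broker would do the distribution for you.

```python
import queue
import threading


def run_competing_consumers(messages, num_consumers=3):
    """Share one queue between several consumers; each message is handled once."""
    work_queue = queue.Queue()
    handled = []             # (message, consumer_id) pairs
    lock = threading.Lock()  # protects the shared result list

    def consumer(consumer_id):
        while True:
            message = work_queue.get()
            if message is None:  # sentinel: this consumer should stop
                break
            with lock:
                handled.append((message, consumer_id))

    consumers = [
        threading.Thread(target=consumer, args=(i,)) for i in range(num_consumers)
    ]
    for c in consumers:
        c.start()

    for message in messages:
        work_queue.put(message)
    for _ in consumers:
        work_queue.put(None)  # one sentinel per consumer, queued after all work

    for c in consumers:
        c.join()
    return handled
```

Which consumer picks up which message is not deterministic, but every message is handled exactly once. Adding consumers raises throughput without any change to the tasks that post the messages.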

Considerations

The biggest consideration to keep in mind when implementing the Queue-Based Load Leveling Pattern is that an Asynchronous Queue is a one-way communication mechanism! If a task expects a reply from a service, you may need to implement a separate mechanism that the service can use to send a response.
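One common way to get a response back is a second queue for replies, with a correlation ID tying each reply to its request. The sketch below assumes in-process `queue.Queue` objects. With a real broker the reply queue would normally be referenced by name or address rather than passed as an object, and `send_request`, `service` and `demo` are names I made up for illustration.

```python
import queue
import threading
import uuid

request_queue = queue.Queue()  # one-way channel from tasks to the service


def service():
    """Process requests and post each result to the reply queue named in the message."""
    while True:
        message = request_queue.get()
        if message is None:  # sentinel: shut down
            break
        correlation_id, payload, reply_queue = message
        # The "work" here is just upper-casing the payload.
        reply_queue.put((correlation_id, payload.upper()))


def send_request(payload, reply_queue):
    """Post a request and return the correlation ID used to match the reply."""
    correlation_id = str(uuid.uuid4())
    request_queue.put((correlation_id, payload, reply_queue))
    return correlation_id


def demo():
    reply_queue = queue.Queue()
    worker = threading.Thread(target=service)
    worker.start()

    # Remember what was sent under each correlation ID.
    sent = {send_request(text, reply_queue): text for text in ("hello", "world")}

    # Collect one reply per request and index the replies by correlation ID.
    replies = dict(reply_queue.get(timeout=5) for _ in sent)

    request_queue.put(None)
    worker.join()
    return sent, replies
```

The task never blocks on the service directly. It posts a request, carries on, and later matches replies to requests by correlation ID.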

Message Brokers

Message Brokers are servers that take care of Queue Handling, Routing Messages, High Availability and Scaling out. There are many different message brokers and discussing which is best in which scenario would take a whole blog post, but a few famous ones are:

- RabbitMQ - Popular, Reliable, Excellent Documentation, Easy to Install, Configure and Use, Clustering, High Availability, Multi-Protocol, Runs on all major Operating Systems, Supports a huge number of developer platforms, Written in Erlang

- Azure Service Bus - The Go-To choice if you're already on Azure, High Throughput, Predictable Performance, Predictable Pricing, Secure, Scalable on Demand

- Apache Kafka - High-Throughput, Low-Latency, Historically used Apache ZooKeeper for cluster coordination (newer versions use the built-in KRaft mode instead), Written in Scala and Java

- Amazon Simple Queue Service - The Go-To choice if you're already on AWS, Reliable, Simple, Flexible, Scalable, Secure, Inexpensive

Choosing a Message Broker requires careful consideration, as each has its own pros and cons.